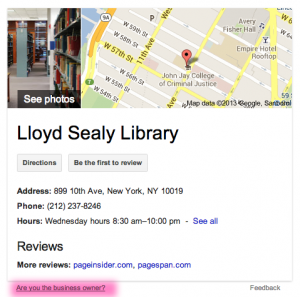

The library where I work and play, Lloyd Sealy Library at John Jay College of Criminal Justice, has had the privilege to have 130+ items scanned and put online by the Internet Archive (thanks METRO! thanks marketing dept at John Jay!). These range from John Jay yearbooks to Alger Hiss trial documents to my favorites, the NYPD Annual Reports (great images and early data viz).

For each scanned book, IA generates master and derivative JPEG2000 files, a PDF, Kindle/Epub/Daisy ebooks, OCR’d text, GIFs, and a DjVu document (see example file list). IA does a great job scanning and letting us do QA, but because they load the content en masse to the internet, there’s no real reason to give us hard copies or a disk drive full of the files. But we do want them, because we want offline access to these digital derivatives of items we own.

The Programming Historian published another fantastic post this month: Data Mining the Internet Archive Collection. In it, Caleb McDaniel walks us through the internetarchive Python library and how to explore and download items in a collection.

I adapted some of his example Python scripts to download all 133 items in John Jay’s IA collection at once, without having to write lots of code myself or visit each page. Awesome! I’ve posted the code to my GitHub (sorry in advance for having a ‘miscellaneous’ folder, I know that is very bad) and copied it below.

Note that:

- it will take HOURS to download all items, like an hour each, since the files (especially the master JP2s) can be quite large, plus IA probably controls download requests to avoid overloading their servers.

- before running, you’ll need to `sudo pip install internetarchive` in Terminal (if using a Mac), or do whatever is the equivalent with Windows, to install the internetarchive Python library.

- your files will download into their own folders, under the IA identifier, wherever you save this .py file
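Since the files can be large, it may help to get a rough size estimate for an item before committing hours to a download. Here is a minimal sketch of one way to do that. It assumes a recent release of the internetarchive library, where `get_item(identifier).files` is a list of metadata dicts whose `size` field is a byte count stored as a string (and is sometimes missing). The helper names `total_size_bytes`, `human_readable`, and `item_size` are made up for this example.

```python
def total_size_bytes(files):
    """Sum the 'size' field (bytes, stored as a string) over file metadata dicts.

    Entries with a missing or unparseable 'size' are skipped,
    so treat the result as a lower bound.
    """
    total = 0
    for f in files:
        try:
            total += int(f.get('size', 0))
        except (TypeError, ValueError):
            continue
    return total


def human_readable(num_bytes):
    """Format a byte count using binary units (B, KiB, MiB, GiB, TiB)."""
    size = float(num_bytes)
    for unit in ('B', 'KiB', 'MiB', 'GiB'):
        if size < 1024:
            return '%.1f %s' % (size, unit)
        size /= 1024
    return '%.1f TiB' % size


def item_size(identifier):
    """Fetch one item's file list from archive.org and return its estimated size in bytes."""
    import internetarchive as ia  # imported here so the helpers above work without the library
    return total_size_bytes(ia.get_item(identifier).files)
```

Calling `human_readable(item_size(itemid))` for a few identifiers should give a feel for how long the whole collection might take on your connection.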

```python
## downloads all items in a given Internet Archive collection
## See http://programminghistorian.org/lessons/data-mining-the-internet-archive for more detailed info

import internetarchive as ia

coll = ia.Search('collection:xxxxxxxx') #fill this in -- searches for the ID of a collection in IA
## example of collection page: https://archive.org/details/johnjaycollegeofcriminaljustice
## the collection ID for that page is johnjaycollegeofcriminaljustice
## you can tell a page is a collection if it has a 'Spotlight Item' on the left

num = 0

for result in coll.results(): #for all items in a collection
    num = num + 1 #item count
    itemid = result['identifier']
    print 'Downloading: #' + str(num) + '\t' + itemid
    item = ia.Item(itemid)
    item.download() #download all associated files (large!)
    print '\t\t Download success.'
```
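The script above uses Python 2 `print` statements and the library's early `Search`/`Item` interface. If you are on Python 3 with a more recent release of internetarchive, the same loop can be sketched with the module-level `search_items` and `download` functions, as far as I know. This is a sketch under those assumptions, not a drop-in replacement: the function name `download_collection` and its `search_items`/`download` override parameters are invented here so the loop can be tried out without touching the network.

```python
def download_collection(collection_id, search_items=None, download=None, **download_kwargs):
    """Download every item in an IA collection and return the identifiers fetched.

    search_items and download default to the internetarchive functions of the
    same name; pass stand-ins to exercise the loop offline.
    Extra keyword arguments are forwarded to download().
    """
    if search_items is None or download is None:
        import internetarchive as ia  # only needed when really talking to archive.org
        search_items = search_items or ia.search_items
        download = download or ia.download

    fetched = []
    # each search result is a dict that includes the item's 'identifier'
    for num, result in enumerate(search_items('collection:' + collection_id), start=1):
        itemid = result['identifier']
        print('Downloading: #' + str(num) + '\t' + itemid)
        download(itemid, **download_kwargs)  # all associated files (large!)
        print('\t\t Download success.')
        fetched.append(itemid)
    return fetched
```

To run it for real, call `download_collection('xxxxxxxx')` with your collection ID filled in. Because a full run takes hours, it can be worth passing `ignore_existing=True`, which recent versions of `download` accept to skip files already on disk, so an interrupted run can pick up where it left off.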