Backstory

In my text mining class at GSLIS, we had a lot of ground to cover. It was easy enough to jump into Oracle SQL Developer and Data Miner and plug into the Oracle database that had been set up for us, and we moved on merrily to processing and classifying. But now, a year later, I’m totally removed from that infrastructure. I wanted to review my work from that class before heading to EMDA next (!) week, but reacquainting myself with Data Miner would require setting up the whole environment first. Not totally understanding the Oracle ecosystem, I thought it would be easy enough to set up a VirtualBox machine and install the Linux setup as needed, but after several failures I gave up and decided to try something new. As it turns out, MALLET does not only classification but topic modeling, too, which is something I’d never done before.

What is?

Here’s how I understand it: topic modeling, like other text mining techniques, treats text as a ‘bag of words,’ keeping track of which words appear and how often rather than the order they appear in. It draws out clusters of words (topics) that appear to be related because, statistically, they tend to occur together in the same documents. We’ve all been subjected to wordles. This is like DIY wordles that can get very specific and can seem to approach semantic understanding with statistics alone.
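The ‘bag of words’ idea is easy to see in a few lines of Python. This is only a minimal sketch of the general concept, not what MALLET does internally; the function name `bag_of_words` and the two sample sentences are made up for illustration.

```python
from collections import Counter
import re

def bag_of_words(text):
    """Lowercase the text, pull out alphabetic tokens, and count them."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return Counter(tokens)

# Two invented sentences that use the same words in a different order.
a = bag_of_words("Papa told me about the war")
b = bag_of_words("The war, papa told me about")

# Word order is thrown away, so the two bags compare as equal.
print(a == b)

# Each word is reduced to a simple count.
print(a["papa"])
```

A topic model starts from counts like these for every document and looks for groups of words whose counts rise and fall together.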

One tool that DH folks mention often is MALLET, the MAchine Learning for LanguagE Toolkit, open-source software developed at UMass Amherst starting in 2002. I was pleased to see that it not only models topics but also does the things I’d wanted Oracle Data Miner to do: classify with decision trees, Naïve Bayes, and more. There are many tutorials and papers written about MALLET, but the one I picked was Getting Started with Topic Modeling and MALLET from The Programming Historian 2, a project out of CHNM. The tutorial is very easy to follow and approaches the subject with a DH-y literariness.

Exploration

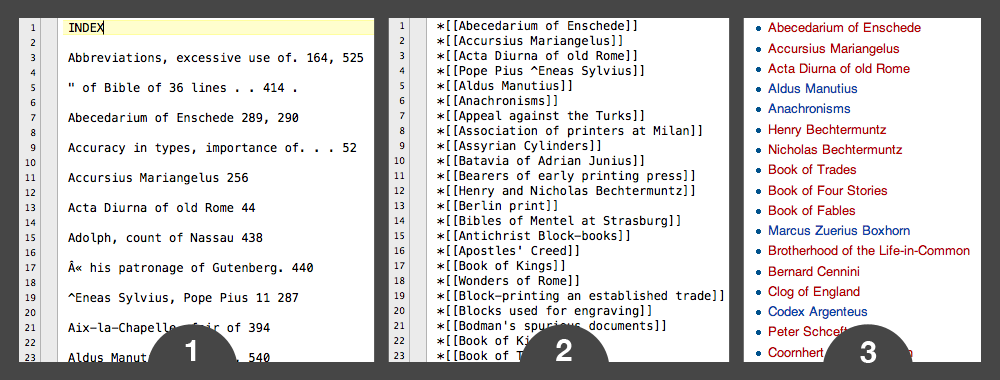

One of my favorite small test texts is personally significant to me — my grandmother’s diary from 1943, which she kept as a 16-year-old girl in Hawaii. I transcribed it and TEI’d it a while ago. I split up my plain-text transcript by month, stripped month and day names from the text (so words wouldn’t necessarily cluster around ‘april’), and imported the 12 .txt files into MALLET. Following the tutorial’s instructions, I ran train-topics and came out with data like this:

| Month | Words in the most closely associated cluster |
| --- | --- |
| January | home diary school ve today feel god parents war eyes hours friends make esther changed beauty class true man |
| February | dear girls thing taxi job find wouldn afraid filipino year american beauty live woman movies happened shoes family makes |
| March | papa don mommy asuna men americans nature realize simply told voice world bus skin ha ago japanese blood diary |
| April | dear diary town made white fun dressed learn sun hour days rest week blue soldiers navy kids straight pretty |
| May | dear girls thing taxi job find wouldn afraid filipino year american beauty live woman movies happened shoes family makes |
| June | papa don mommy asuna men americans nature realize simply told voice world bus skin ha ago japanese blood diary |
| July | red day leave dance min insular top idea half country lose realized servicemen lot breeze ahead appearance change lie |
| August | betty wahiawa taxi set show mr wanted party mama ve wrong insular helped played dinner food chapman fil hawaiian |
| September | betty wahiawa taxi set show mr wanted party mama ve wrong insular helped played dinner food chapman fil hawaiian |
| October | johnny rose nice supper breakfast tiquio lunch lydia office ll raymond theater tonight doesn tomorrow altar kim warm forget |
| November | didn left papa richard long met told house back felt sat gave hand don sweet called meeting dress miss |
| December | ray lydia dorm bus lovely couldn caught ramos asked kissed park waikiki close st arm loved xmas held world |

Note that some clusters appear twice. MALLET considers the directory of .txt files as its whole corpus, then spits out which clusters each file is most closely associated with.
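Picking the cluster each file is most closely associated with comes down to taking the largest topic proportion per document. Here is a minimal sketch of that step, assuming the per-file proportions have already been read into a dictionary. The function name `dominant_topic` is my own, and the numbers are invented for illustration; they are not the diary’s real output.

```python
def dominant_topic(doc_topics):
    """Return {document: (topic_id, proportion)} for the highest-weighted topic."""
    result = {}
    for doc, weights in doc_topics.items():
        # The topic id whose proportion is largest for this document.
        topic_id = max(weights, key=weights.get)
        result[doc] = (topic_id, weights[topic_id])
    return result

# Invented proportions, for illustration only (not real MALLET output).
doc_topics = {
    "february.txt": {0: 0.10, 1: 0.65, 2: 0.25},
    "may.txt": {0: 0.20, 1: 0.55, 2: 0.25},
    "december.txt": {0: 0.15, 1: 0.05, 2: 0.80},
}

for doc, (topic_id, weight) in dominant_topic(doc_topics).items():
    print(doc, topic_id, weight)
```

In this toy data two files share the same top topic, which is how the same cluster can show up for more than one month.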

As you can see, I should really have taken out ‘dear’ and ‘diary.’ But I can see that these clusters make sense. She begins the diary in mid-January. It’s her first diary ever, so she tends first toward the grandiose, talking about changes in technology and what it means to be American, and later begins to write about the people in her life, like Betty, her roommate, and Tiquio, the creepy taxi driver. In almost all of the clusters, the War shows up somehow. But what I was really looking forward to was seeing how her entries’ topics changed in December, when she began dating Ray, the man who would be my grandfather. Aww.
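For next time, the cleanup I did by hand (stripping month and day names), extended to drop ‘dear’ and ‘diary,’ could be sketched like this. It is not the script I actually used; the names `DROP` and `strip_words` are hypothetical, and it assumes Python’s default English month and day names.

```python
import calendar
import re

# Month and day names from the standard library (English in the default
# locale), plus the two extra words that crept into the clusters.
DROP = {name.lower() for name in calendar.month_name if name}
DROP |= {name.lower() for name in calendar.day_name}
DROP |= {"dear", "diary"}

def strip_words(text, drop=DROP):
    """Remove whole words found in `drop` (case-insensitive), then tidy spaces."""
    def replace(match):
        word = match.group(0)
        return "" if word.lower() in drop else word
    cleaned = re.sub(r"[A-Za-z]+", replace, text)
    # Collapse the runs of whitespace left behind by removed words.
    return re.sub(r"\s{2,}", " ", cleaned).strip()

print(strip_words("Dear Diary, Monday in April was warm."))
```

One caveat: this also removes ‘may’ and ‘march’ when they are used as ordinary words. MALLET’s importer has an `--extra-stopwords` option that takes a file of additional words to ignore, which may be the simpler route.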

It’s a small text, in the grand scheme of things, clocking in at around 40,000 words. If you want to see what one historian did with MALLET and a diary kept for 27 years, Cameron Blevins has a very enthusiastic blog post peppered with very nice R visualizations.